Deployments at scale for Django framework projects

Building deploys that are ready to scale.

For this post, I need to set up a scenario. As an example, imagine that our application stack has:

- A backend application built mostly with Django and Django REST framework;

- A frontend application built mostly with Django and Vue.js;

- A mobile application that consumes the backend;

- A BFF (backend for frontend) layer built on top of the backend REST API.

Before we can go on, I must say something about this article's scope.

To keep things simple, this is a minimal architecture for growing projects that run on WSGI servers. I will not walk through the technical details step by step; instead, I will focus on the concepts first. Please feel free to expand on those concepts, trim them, or adapt them in any way that suits you.

With the scope defined, let's get started!

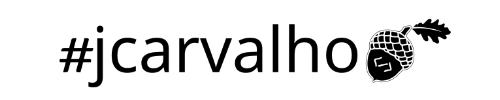

The full picture:

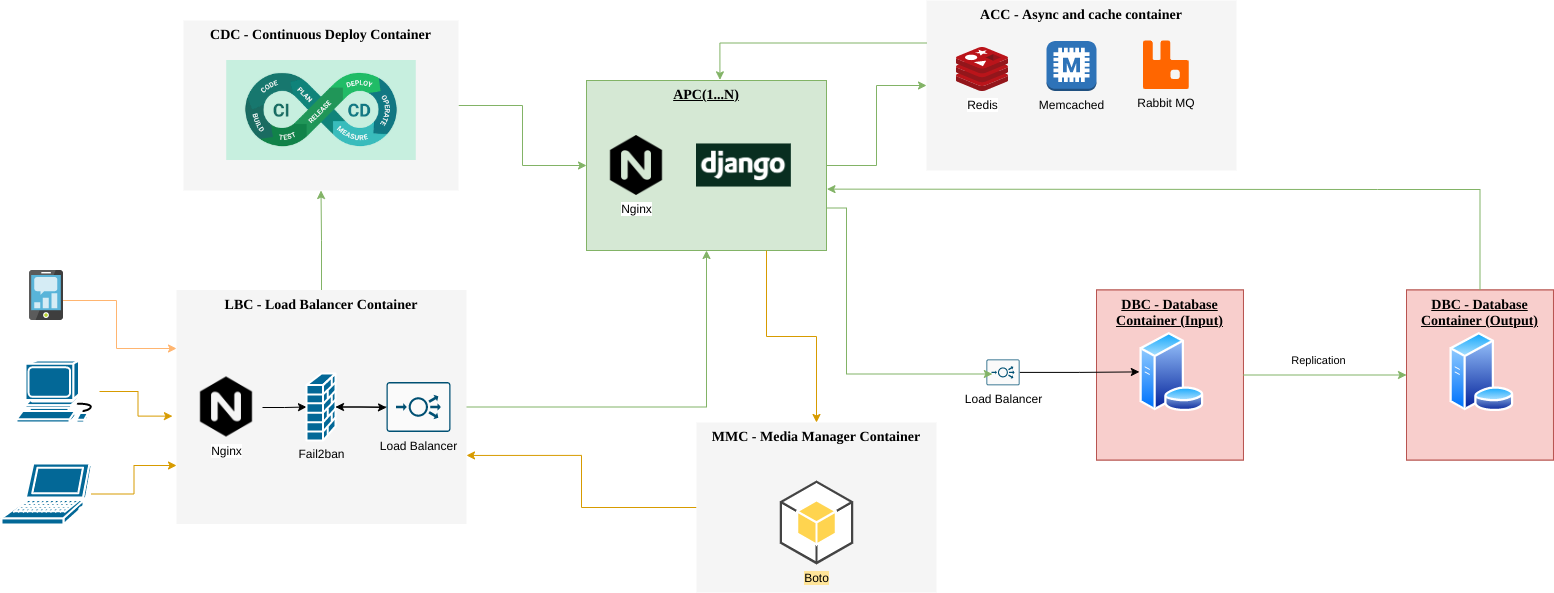

LBC - The load balancer container:

This is where all requests arrive. For security reasons, this container must be the only access point from the dangerous outside world.

Typically, this container only accepts incoming connections on:

- Port 80 for HTTP access. This port is only needed to redirect clients to HTTPS;

- Port 443 for HTTPS (HTTP over SSL/TLS).

Let's look at the main role of each piece of the software stack:

Nginx: A powerful web server that serves static files, proxies requests to the WSGI servers when needed, and acts as a balancer across the application containers;

Fail2ban: Scans request log files for malicious patterns to identify crawlers, spiders, and bots, watches for failed authentication attempts, and triggers a ban for the offending requests;

UFW: Carries out the bans for Fail2ban, denying access to the origins of malicious requests.
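To make the Fail2ban idea concrete, here is a minimal Python sketch of the same concept: count failed authentication responses per IP in an access log and flag the addresses that cross a threshold. It is not how Fail2ban is implemented or configured. The log format (Nginx "combined"), the `find_offenders` name, the threshold, and the sample lines are all assumptions made for illustration.

```python
import re
from collections import Counter

# Assumed log format: Nginx "combined" access log lines.
LINE_RE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[[^\]]+\] "[^"]*" (?P<status>\d{3}) '
)


def find_offenders(log_lines, max_failures=3):
    """Return the sorted IPs with at least max_failures 401/403 responses."""
    failures = Counter()
    for line in log_lines:
        match = LINE_RE.match(line)
        if match and match.group("status") in {"401", "403"}:
            failures[match.group("ip")] += 1
    return sorted(ip for ip, count in failures.items() if count >= max_failures)


# Hypothetical sample lines using documentation-only IP ranges.
sample_log = [
    '203.0.113.7 - - [10/Oct/2023:13:55:36 +0000] "POST /api/login/ HTTP/1.1" 401 52 "-" "curl/8.0"',
    '203.0.113.7 - - [10/Oct/2023:13:55:37 +0000] "POST /api/login/ HTTP/1.1" 401 52 "-" "curl/8.0"',
    '198.51.100.4 - - [10/Oct/2023:13:55:38 +0000] "GET / HTTP/1.1" 200 1024 "-" "Mozilla/5.0"',
    '203.0.113.7 - - [10/Oct/2023:13:55:39 +0000] "POST /api/login/ HTTP/1.1" 401 52 "-" "curl/8.0"',
    '198.51.100.4 - - [10/Oct/2023:13:55:40 +0000] "POST /api/login/ HTTP/1.1" 401 52 "-" "Mozilla/5.0"',
]

offenders = find_offenders(sample_log)
```

In the real stack, Fail2ban does the scanning with its own filters and hands the resulting addresses to the firewall; the sketch only shows the "scan, count, decide" loop behind it.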

When you need to scale this container, scaling vertically (more CPU and memory) is usually enough to handle many thousands of additional requests.

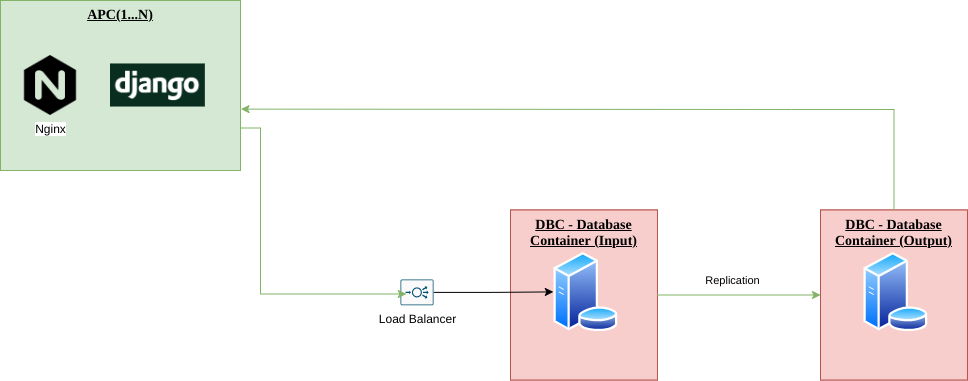

APC - The Application Container:

To make the diagrams easier to read, note that all requests reachable from the outside world are drawn in orange, and all requests between our own systems over the private network are drawn in green.

The connection from the LBC to the application container uses HTTP on port 80 over the private network (VPN).

Here your Django app runs on a WSGI server (Gunicorn and uWSGI are common choices). The resulting Unix socket file is mapped in the APC's Nginx server so that requests coming from the LBC can reach it.
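As a rough illustration, a Gunicorn configuration file is itself plain Python, so the socket setup could be sketched like this. The project name `myproject`, the socket path, and the tuning values are placeholders I made up; only the setting names come from Gunicorn.

```python
# gunicorn.conf.py (hypothetical values, adjust them to your project)
import multiprocessing

# Dotted path to the WSGI callable; "myproject" is a placeholder name.
wsgi_app = "myproject.wsgi:application"

# Listen on a Unix socket file that the APC's Nginx proxies to.
bind = "unix:/run/gunicorn/myproject.sock"

# Common starting point from the Gunicorn docs: (2 x CPU cores) + 1.
workers = multiprocessing.cpu_count() * 2 + 1

# Recycle workers after a number of requests to limit slow memory leaks.
max_requests = 1000
max_requests_jitter = 100

# Seconds a worker may stay silent before it is killed and restarted.
timeout = 30
```

The APC's Nginx would then point its proxy at the same socket path, which is why both sides need to agree on where the file lives.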

When you need to scale this container, scaling horizontally works like a charm! Just register the new APC's address in your LBC and voilà! You will have one more APC container to process the incoming requests.
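The effect of adding one more APC can be pictured with a small round-robin sketch. In practice Nginx does this for you (round-robin is its default balancing method for a group of upstream servers), so the `RoundRobinPool` class and the private addresses below are purely illustrative.

```python
from itertools import cycle


class RoundRobinPool:
    """Hand out backend addresses in turn, like a simple balancer would."""

    def __init__(self, backends):
        self._backends = list(backends)
        self._cycle = cycle(self._backends)

    def add(self, backend):
        """Register a new backend; the rotation restarts from the first one."""
        self._backends.append(backend)
        self._cycle = cycle(self._backends)

    def next_backend(self):
        return next(self._cycle)


# Hypothetical private-network addresses for two APC containers.
pool = RoundRobinPool(["10.0.0.11:80", "10.0.0.12:80"])
before = [pool.next_backend() for _ in range(3)]

# Scaling out: a third APC joins the rotation.
pool.add("10.0.0.13:80")
after = [pool.next_backend() for _ in range(3)]
```

Because each request can land on any APC, the application containers should not keep local state such as sessions or uploaded files; that is what the cache, media, and database containers are for.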

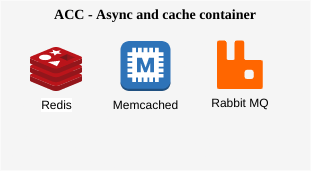

ACC - The async calls and cache container:

At some point, any modern app needs to cache parts of itself or run async tasks. This container offers several software options for those jobs.

Redis: Good for caching and as a message broker for async tasks;

Memcached: My favorite choice for caching;

RabbitMQ: An excellent, high-speed message queue (MQ) server;

Elasticsearch: Good for indexing data and speeding up frequently repeated queries;

Each APC container calls those ACC services for caching and async tasks. Usually, the ACC container doesn't need to scale.
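On the Django side, pointing every APC at the same cache and broker is mostly a matter of settings. The fragment below is a sketch under a few assumptions: the ACC lives at a made-up private address, Memcached is the cache (through the `pymemcache` package), and Celery is the task runner talking to RabbitMQ. Celery is not part of the stack described above; it is simply a common choice for this role.

```python
# settings.py (fragment); host, ports, and credentials are placeholders
ACC_HOST = "10.0.0.20"  # hypothetical private address of the ACC

CACHES = {
    "default": {
        # Memcached backend shipped with Django (requires pymemcache).
        "BACKEND": "django.core.cache.backends.memcached.PyMemcacheCache",
        "LOCATION": f"{ACC_HOST}:11211",
        "TIMEOUT": 300,  # default expiry, in seconds
    }
}

# Assumption: Celery configured with the "CELERY" settings namespace,
# using RabbitMQ on the ACC as its broker.
CELERY_BROKER_URL = f"amqp://app_user:change-me@{ACC_HOST}:5672//"
```

Since all APC containers share these settings, a value cached by one of them is visible to the others, and a task queued by any of them can be picked up by whichever worker is free.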

MMC - Media Manager Container:

Serving media files as a service is an important concept for modern applications. With Django, the boto3 library (typically through the django-storages app) can access AWS S3 object storage or even your own MinIO instance. The most important things here are monitoring disk usage and, of course, regular backups and CDN usage. (Special thanks to Cloudflare.)
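A settings sketch for this could look like the fragment below. It assumes Django 4.2 or newer (for the `STORAGES` setting) and a recent django-storages release with its S3 backend; the bucket name and the environment variable names are placeholders I chose, not anything the tools require.

```python
# settings.py (fragment); assumes Django >= 4.2 and django-storages with boto3
import os

STORAGES = {
    "default": {
        # S3-compatible backend from django-storages; also works with MinIO.
        "BACKEND": "storages.backends.s3.S3Storage",
        "OPTIONS": {
            # Placeholder bucket name, overridable through the environment.
            "bucket_name": os.environ.get("MEDIA_BUCKET", "my-media-bucket"),
            # Leave unset for AWS S3; set to your MinIO URL otherwise.
            "endpoint_url": os.environ.get("S3_ENDPOINT_URL"),
            # Optional CDN hostname placed in front of the bucket.
            "custom_domain": os.environ.get("MEDIA_CDN_DOMAIN"),
        },
    },
    "staticfiles": {
        "BACKEND": "django.contrib.staticfiles.storage.StaticFilesStorage",
    },
}
```

Credentials are deliberately left out of the file; boto3 can pick them up from the standard AWS environment variables, which keeps secrets out of the repository.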

DBC - Database Container:

Most database servers can be tuned for write operations or for read operations, but not for both at once. It's usually a good idea to have two or more database servers replicated in a master -> slave scheme, performing write operations on the master database server and read operations on the slave database servers. Django applications can handle multiple servers efficiently with the "database routers" feature.
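A minimal router for this split could look like the sketch below. The four method names are the ones Django's database router interface expects; the aliases `primary`, `replica1`, and `replica2` are assumptions and must match the keys of your own `DATABASES` setting.

```python
import random


class PrimaryReplicaRouter:
    """Send writes to the primary (master) and reads to a random replica."""

    primary = "primary"                  # hypothetical DATABASES alias
    replicas = ["replica1", "replica2"]  # hypothetical DATABASES aliases

    def db_for_read(self, model, **hints):
        return random.choice(self.replicas)

    def db_for_write(self, model, **hints):
        return self.primary

    def allow_relation(self, obj1, obj2, **hints):
        # All aliases hold the same data, so relations between them are fine.
        known = {self.primary, *self.replicas}
        if obj1._state.db in known and obj2._state.db in known:
            return True
        return None  # no opinion, let other routers decide

    def allow_migrate(self, db, app_label, model_name=None, **hints):
        # Run migrations on the primary only; replication carries the
        # schema changes over to the replicas.
        return db == self.primary


router = PrimaryReplicaRouter()
```

The router is activated by listing its dotted path in the `DATABASE_ROUTERS` setting. Keep replication lag in mind: a read that immediately follows a write may hit a replica that has not received the change yet.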

Another benefit of replication is that you can keep a replica in a different geographic location from the rest of the system, receiving data updates and ready to be used for disaster recovery.

CDC - Continuous Deploy Container:

The continuous deploy container receives commit information from our git repositories and triggers a deploy on every known application container. With it, any push to the master branch is deployed with no human interaction.
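The decision at the heart of such a container can be sketched in a few lines: check that the notification really comes from your git host, then check that it is a push to the deploy branch. The sketch assumes a GitHub-style webhook (an HMAC-SHA256 signature sent as `sha256=<hex>` and a `ref` field in the JSON payload); other git hosts use different formats, and `should_deploy` is a hypothetical helper name.

```python
import hashlib
import hmac
import json


def should_deploy(body: bytes, signature: str, secret: bytes,
                  branch: str = "master") -> bool:
    """Return True only for an authentic push to the given branch."""
    expected = "sha256=" + hmac.new(secret, body, hashlib.sha256).hexdigest()
    # Constant-time comparison avoids leaking information through timing.
    if not hmac.compare_digest(expected.encode(), signature.encode()):
        return False
    payload = json.loads(body)
    return payload.get("ref") == f"refs/heads/{branch}"


# Hypothetical usage with a made-up shared secret and payload.
secret = b"change-me"
body = json.dumps({"ref": "refs/heads/master"}).encode()
signature = "sha256=" + hmac.new(secret, body, hashlib.sha256).hexdigest()
deploy_now = should_deploy(body, signature, secret)
```

When the function returns `True`, the container would go on to run the actual deploy steps on each application container; those steps depend on your tooling and are left out here.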

That's it!

Starting a WSGI application deployment the right way can reduce issues in projects that need to scale. All the pieces here leave room for growth throughout a project's life cycle but, of course, there are many other approaches to deployment, and I would like to hear about them from you in the comments.